Why Serverless GPUs Are Reshaping CapEx: From 40–90% Infrastructure Savings to 10× ROI for AI Workloads

Lately, we’ve been getting a surge of questions about Serverless GPUs — what they are, how they differ from traditional GPU-as-a-Service, and most importantly, what the ROI looks like. Across our client base, there’s growing demand for quantitative frameworks that compare serverless GPU deployments against always-on clusters, measuring utilization-driven savings and time-to-scale advantages.

When we published our CoreWeave analysis back in May, the stock was trading around $45. Since then, it has soared by 168%. In that same piece, we also examined the broader GPU-as-a-Service (GaaS) market and CoreWeave’s strategic deal with OpenAI — one of the first signals that GPU infrastructure itself was becoming a profit center, not a commodity.

That thesis is now being validated across the ecosystem. In September, Microsoft signed a $17.4 billion agreement with Nebius to secure long-term GPU capacity for its AI operations — with upside provisions taking the total closer to $19.4 billion. Markets reacted instantly: Nebius stock jumped nearly 47% pre-market on the announcement.

Meanwhile, on the inference side, Together AI has been on a rapid climb. The company now carries a $3.3 billion valuation, reports $50 million in 2024 revenue, and is guiding toward $120 million for 2025 — proof that serverless inference APIs can scale into real enterprise revenue.

All of this is reinforcing the CAPEX forecasts we’ve seen from Citi and others, who continue to project a multi-trillion-dollar AI infrastructure super-cycle. Citi recently raised its estimate for cumulative AI-related infrastructure spending by major tech companies to $2.8 trillion through 2029, up from $2.3 trillion earlier this year. The same report forecasts AI revenues hitting $780 billion by 2030, up from $43 billion today — underscoring how under-modeled this sector still is.

As Citi’s analysts put it:

“Our conversations with CIOs and CTOs reflect a similar increase in urgency around adoption at the enterprise level. Based on this, we are raising our 2026E forecast for AI CAPEX across hyperscalers from $420 billion to $490 billion, and through 2029 from $2.3 trillion to $2.8 trillion.”

Those forecasts align closely with new data from Dell’Oro Group, which shows CAPEX growth across AI data centers accelerating at a double-digit CAGR well into the next decade.

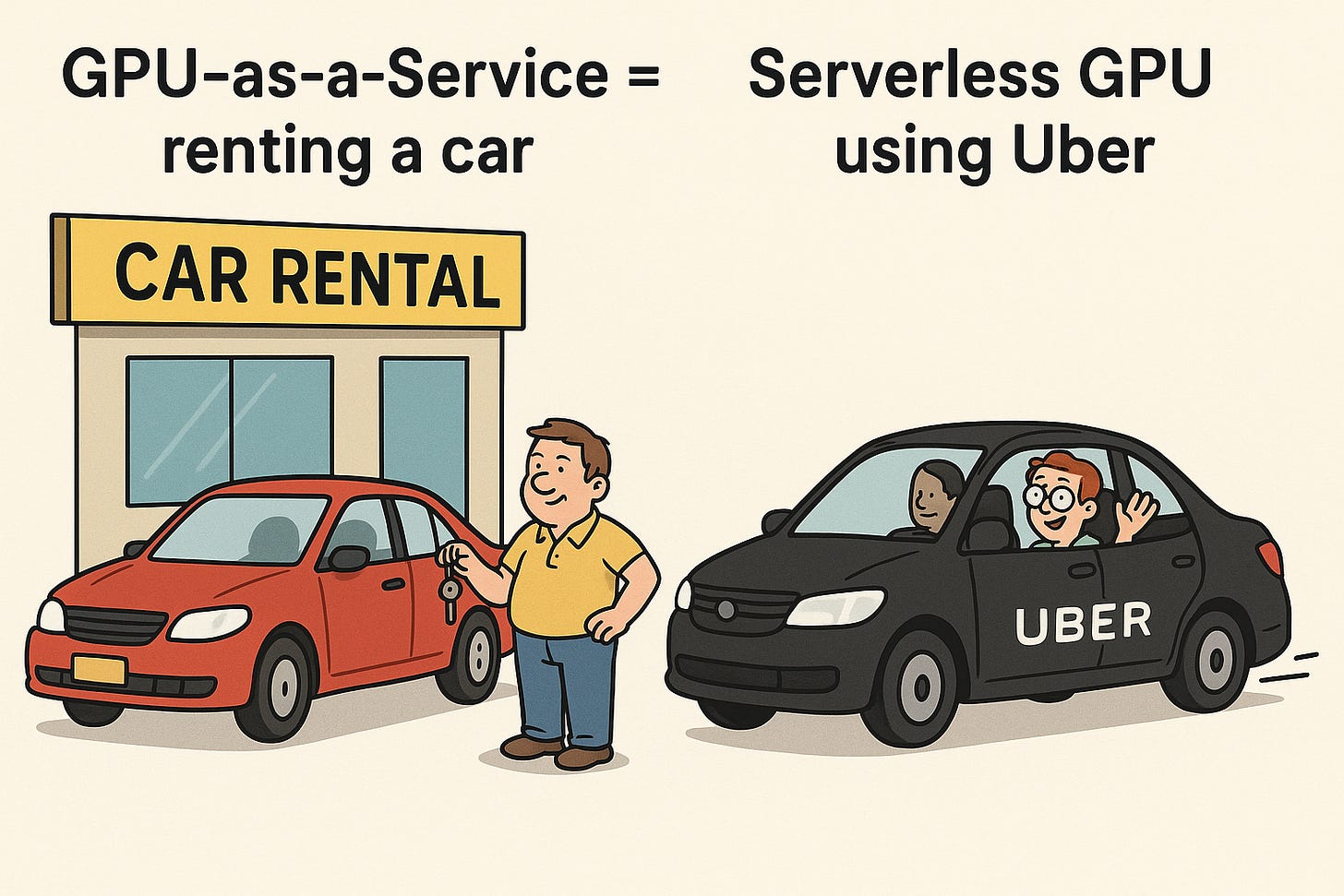

So while “Serverless GPUs” might sound like a close cousin of “GPU-as-a-Service,” it’s actually a different operating model, with its own economics, scaling behavior, and cost levers. That’s the focus of today’s piece:

1. Operating Model Deep Dive: Serverless GPU vs. GPU-as-a-Service

2. Benchmarking the Serverless GPU Landscape

   a. Economic KPIs – pricing, utilization efficiency, and unit cost

   b. Technical / Operational KPIs – latency, cold-start performance, and scaling

3. Use Case Spotlight: How Substack Leverages Modal AI

4. ROI Calculator for Serverless GPU Migrations: A structured framework to estimate cost savings from migrating to serverless GPU environments, using two modeled workloads:

   a. Case A: Small / Spiky Load (Modal Serverless)

   b. Case B: Large / Steady Load (Together AI Dedicated / Hybrid Serverless)

We’ve built the economics of serverless GPU adoption into a hands-on Excel model that lets you simulate your own workloads. The tool compares traditional provisioned GPU costs with serverless pricing, accounting for utilization, concurrency, and idle overhead.

You can tweak sliders for GPU hours, utilization %, and burst frequency to see how the break-even point shifts between Case A (small, spiky workloads) and Case B (large, steady inference pipelines). The output updates instantly to show total monthly cost and ROI, making it easy to visualize the financial impact of going serverless before running a single experiment.
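For readers who prefer code to spreadsheets, the same comparison can be sketched in a few lines of Python. This is a minimal illustration rather than the Excel model itself. The hourly rates, the idle-overhead fraction, the 730-hour month, and both workload profiles are hypothetical placeholders, not vendor quotes or figures from our analysis. The simplifying assumption is that an always-on cluster bills every provisioned GPU for every hour, while serverless bills only busy GPU-hours plus a fractional overhead for cold starts and keep-warm time.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # assumption: average number of hours in a month


@dataclass
class Workload:
    name: str
    busy_gpu_hours: float  # GPU-hours of actual work per month
    provisioned_gpus: int  # always-on GPUs needed to cover peak concurrency


def provisioned_cost(w: Workload, hourly_rate: float) -> float:
    """Always-on cluster: every GPU is billed for every hour, busy or idle."""
    return w.provisioned_gpus * HOURS_PER_MONTH * hourly_rate


def serverless_cost(w: Workload, hourly_rate: float, idle_overhead: float = 0.0) -> float:
    """Serverless: busy hours only, inflated by a cold-start / keep-warm overhead."""
    return w.busy_gpu_hours * (1 + idle_overhead) * hourly_rate


def utilization(w: Workload) -> float:
    """Share of provisioned GPU-hours that do real work."""
    return w.busy_gpu_hours / (w.provisioned_gpus * HOURS_PER_MONTH)


def break_even_utilization(provisioned_rate: float, serverless_rate: float,
                           idle_overhead: float = 0.0) -> float:
    """Utilization at which both options cost the same.

    Below this level serverless is cheaper; above it the always-on cluster wins.
    Capped at 1.0, which means serverless is cheaper at any utilization.
    """
    return min(1.0, provisioned_rate / (serverless_rate * (1 + idle_overhead)))


def savings(w: Workload, provisioned_rate: float, serverless_rate: float,
            idle_overhead: float = 0.0) -> float:
    """Fractional saving from going serverless (negative means it costs more)."""
    always_on = provisioned_cost(w, provisioned_rate)
    return 1 - serverless_cost(w, serverless_rate, idle_overhead) / always_on


# Hypothetical inputs, chosen only to show the mechanics.
PROVISIONED_RATE = 2.00  # $/GPU-hour, reserved capacity (illustrative)
SERVERLESS_RATE = 4.00   # $/GPU-hour, per-use billing (illustrative)
IDLE_OVERHEAD = 0.10     # 10% extra billed time (illustrative)

case_a = Workload("Case A: small / spiky", busy_gpu_hours=100, provisioned_gpus=2)
case_b = Workload("Case B: large / steady", busy_gpu_hours=5000, provisioned_gpus=8)

if __name__ == "__main__":
    threshold = break_even_utilization(PROVISIONED_RATE, SERVERLESS_RATE, IDLE_OVERHEAD)
    print(f"Break-even utilization: {threshold:.1%}")
    for w in (case_a, case_b):
        print(
            f"{w.name}: utilization {utilization(w):.1%}, "
            f"always-on ${provisioned_cost(w, PROVISIONED_RATE):,.0f}, "
            f"serverless ${serverless_cost(w, SERVERLESS_RATE, IDLE_OVERHEAD):,.0f}, "
            f"saving {savings(w, PROVISIONED_RATE, SERVERLESS_RATE, IDLE_OVERHEAD):.0%}"
        )
```

With these placeholder inputs, the spiky workload sits far below the break-even utilization and the steady workload sits above it, which is the pattern the two modeled cases are meant to illustrate. Swapping in your own rates and GPU-hours moves the threshold accordingly.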

Please note: The insights presented in this article are derived from confidential consultations our team has conducted with clients across private equity, hedge funds, startups, and investment banks, facilitated through specialized expert networks. Due to our agreements with these networks, we cannot reveal specific names from these discussions. Therefore, we offer a summarized version of these insights, ensuring valuable content while upholding our confidentiality commitments.