Celestica's Four Strategic Moats and a 99% Jump in Networking Revenue: Why Celestica's White-Box Switching Is Quietly Powering GenAI Training at Meta

We know we promised our next piece would cover Elasticsearch, but after a strong wave of inbound questions about Celestica and its role in GenAI infrastructure, we've decided to move it up the queue. Don't worry: our deep dive on Elasticsearch is still coming next.

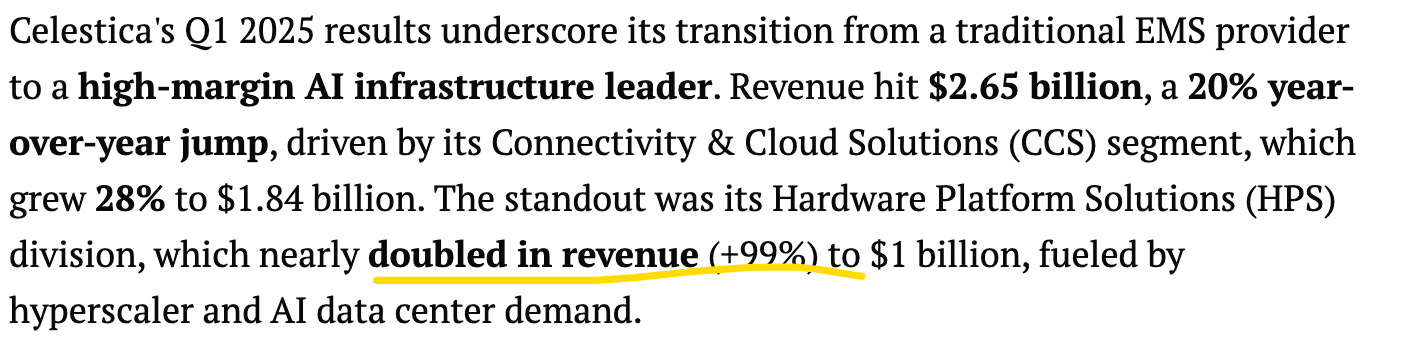

Lately, many of our clients have been asking about Celestica: how is a company known for low-margin hardware suddenly showing up in hyperscale AI supply chains? A 99% jump in networking segment revenue reflects more than cyclical tailwinds. It signals that Celestica is increasingly winning designs in core AI infrastructure.

Historically known as a low-margin ODM (original design manufacturer), Celestica is now emerging as a critical enabler of hyperscaler AI buildouts, particularly in white-box switching and rack-scale delivery. Clients are asking sharp questions: What is its moat? Why do Meta and Microsoft buy from it? Can it scale beyond top-of-rack (ToR) switching into the spine and leaf layers of AI fabrics, where Arista has traditionally led?

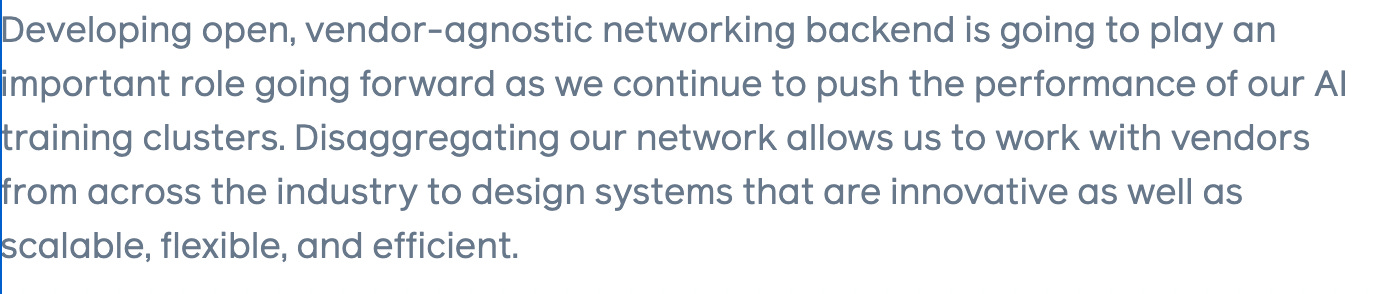

Meta, one of Celestica’s largest AI infrastructure customers, has publicly stated that the future of AI networking at hyperscale data centers will be built on disaggregated, white-box platforms.

This isn't just a philosophical endorsement of open architectures; it's a procurement directive. Meta's own Disaggregated Scheduled Fabric (DSF), which underpins its GenAI training clusters, is built from Broadcom-based switches supplied by partners such as Celestica. This validates Celestica not just as a vendor but as a core execution partner in Meta's long-term AI fabric roadmap.

In today's article, we'll break down how the GenAI networking stack is evolving and why Celestica is increasingly viewed as a core beneficiary of this shift. We'll close with a directional revenue model that quantifies what Meta's AI cluster buildout could mean for Celestica's switch business, based on public topology data, conservative average selling prices (ASPs), and realistic share assumptions.

Topics include:

- GenAI Network Topology & Meta's Reference Architecture
- Comparing Three Network Models: We contrast chassis-based routers, traditional spine–leaf, and Distributed Disaggregated Chassis (DDC) to highlight the architectural evolution.
- Inside Meta's DSF: The 4K and 16K GPU Pod Topologies: A dive into Meta's Disaggregated Scheduled Fabric, including VOQ (virtual output queue) scheduling, rack-scale design, and vendor implications.
- AI Training vs. Inference Data Centers: We explain why training and inference have fundamentally different networking requirements, and what that means for infrastructure buyers.
- The Outlook for White-Box, Disaggregated Switching: CAGR, market projections, and how open platforms are gaining traction.
- Celestica's Four Strategic Moats (and Who They Compete With)
- Directional Revenue Model: What Meta's AI Cluster Build Means for Celestica: Using Meta's own topology disclosures, we estimate how many switches are involved and how much revenue could flow to Celestica per generation.

Please note: The insights presented in this article are derived from confidential consultations our team has conducted with clients across private equity, hedge funds, startups, and investment banks, facilitated through specialized expert networks. Due to our agreements with these networks, we cannot reveal specific names from these discussions. We therefore offer a summarized version of these insights, delivering valuable content while upholding our confidentiality commitments.