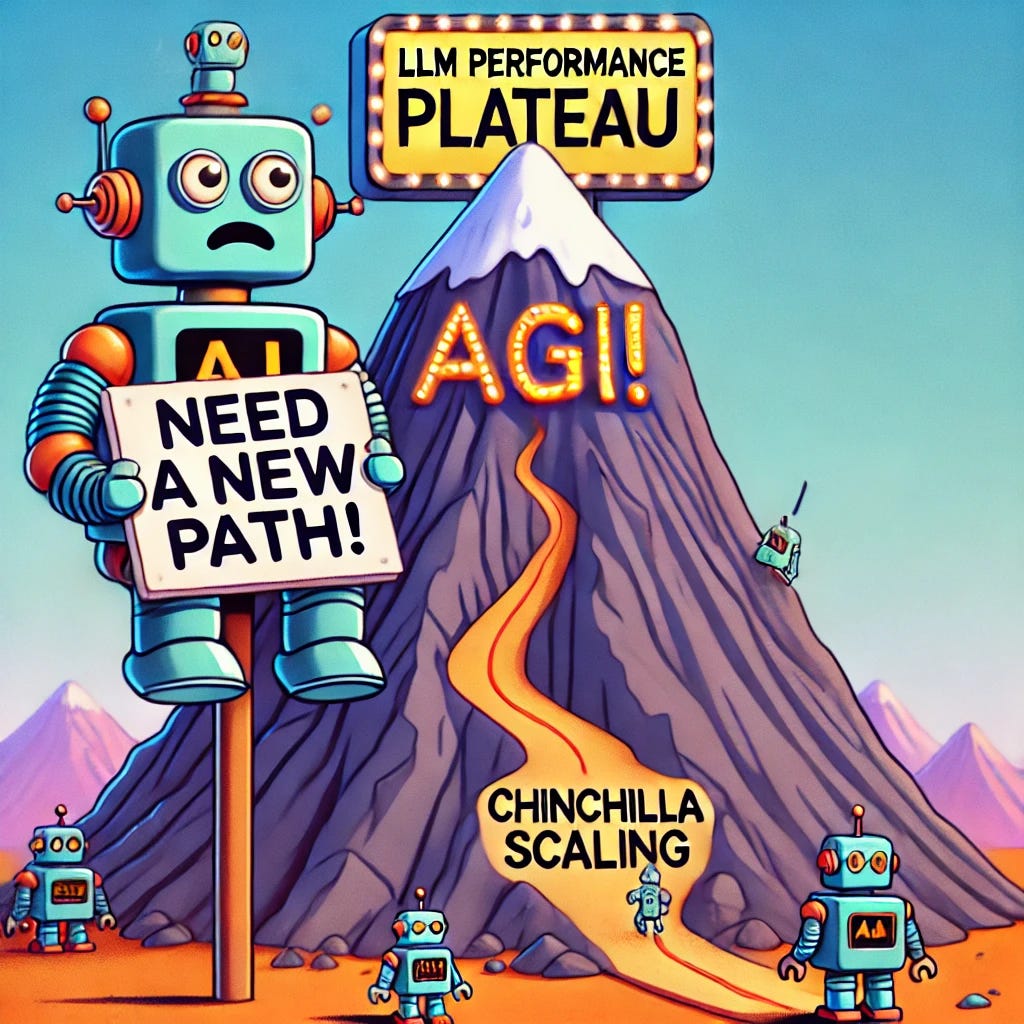

Are LLMs Plateauing in Pre-Training? Industry Impact and Levers to Enhance LLM Performance Further

In recent news, the AI community has been abuzz with discussions about the slowing progress in LLM performance during pre-training. Reports indicate that advancements in LLM capabilities are plateauing in the pre-training phase, prompting industry leaders like OpenAI to shift their strategic focus.

Recent articles have reported this slowdown.

Another study by Apple researchers highlights the limitations of LLMs, concluding that their genuine logical reasoning is fragile.

Our clients have been actively reaching out to express concerns and seek insights into this phenomenon. We even held an investor teleconference on the topic because of its broad impact. In light of these events, we are delving deeper into the topic and will explore how leading AI companies are being affected and how they are adapting their strategies.

Here, we will talk about:

Recent Advances in LLM Scaling

What is Google DeepMind’s Paper on Chinchilla Scaling?

Are LLMs and the Transformer Architecture Reaching Their Limits?

Industry Impact: Who is Impacted by the Slowing Down of LLM Pre-training?

Our Opinion: Additional Levers to Enhance LLM Performance Further

Please note: The insights presented in this article are derived from confidential consultations our team has conducted with clients across private equity, hedge funds, startups, and investment banks, facilitated through specialized expert networks. Due to our agreements with these networks, we cannot reveal specific names from these discussions. Therefore, we offer a summarized version of these insights, ensuring valuable content while upholding our confidentiality commitments.

Recent Advances in LLM Scaling

Our clients are asking whether model performance in pre-training is plateauing.

Let’s unpack this.

A recent article touches on AI improvement slowing down.

The article discusses the slowing rate of improvement in foundation models, the basic building blocks of LLM products. First, let’s understand what model plateauing is.
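To make "plateauing" concrete, here is a minimal sketch assuming a Chinchilla-style parametric loss, L(N, D) = E + A/N^α + B/D^β, where N is the parameter count and D the number of training tokens. The constants are the fitted values reported in the Chinchilla paper (Hoffmann et al., 2022) and are used here only for illustration, not as a forecast for any current model; the function names `chinchilla_loss` and `doubling_gains` are hypothetical. Because each term shrinks as a power law, every doubling of scale buys a smaller loss reduction than the one before, and the loss can never fall below the irreducible floor E.

```python
# Illustrative sketch: diminishing returns under a Chinchilla-style
# parametric loss L(N, D) = E + A / N**alpha + B / D**beta.
# Constants are the fitted values reported by Hoffmann et al. (2022);
# treat them as illustrative, not as a forecast for any current model.
E, A, B = 1.69, 406.4, 410.7
ALPHA, BETA = 0.34, 0.28


def chinchilla_loss(n_params: float, n_tokens: float) -> float:
    """Predicted pre-training loss for n_params parameters and n_tokens tokens."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def doubling_gains(n_params: float, n_tokens: float, steps: int) -> list[float]:
    """Loss reduction from each successive doubling of both parameters and tokens."""
    gains = []
    loss = chinchilla_loss(n_params, n_tokens)
    for _ in range(steps):
        n_params *= 2
        n_tokens *= 2
        new_loss = chinchilla_loss(n_params, n_tokens)
        gains.append(loss - new_loss)
        loss = new_loss
    return gains


if __name__ == "__main__":
    # Start near Chinchilla's own scale: 70B parameters, 1.4T tokens.
    n, d = 70e9, 1.4e12
    print(f"start loss: {chinchilla_loss(n, d):.4f} (irreducible floor E = {E})")
    for i, gain in enumerate(doubling_gains(n, d, steps=5), start=1):
        # Doubling both N and D roughly quadruples training compute (C ~ 6 * N * D).
        print(f"doubling {i}: loss drops by {gain:.4f}")
```

Each doubling multiplies the parameter term by 2^-0.34 and the data term by 2^-0.28, so the gains shrink geometrically while the compute bill roughly quadruples; under this assumed fit, that is what a plateau looks like.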